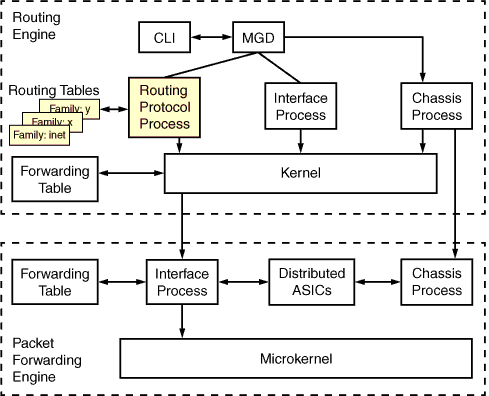

Let me start with a couple of statements that explain the issue in broader terms. JUNOS is a system based on a fairly old version of FreeBSD UNIX (I think something like version 4.X). BSD serves as the underlying layer for services that run as daemons on top of the OS. This is great for many reasons, as you can do things like separate various functions completely into daemons, or you can use existing BSD packages without much work, allowing for faster implementation of needed features. BSD in general is also quite good in the way it treats the kernel/network stack (which is different from Linux). So what does this look like? In traditional JUNOS the logic would be something like this:

Well, this all looks quite nice, but running things like this has some not-so-obvious limitations. As you can see, the kernel binds all the daemons together, and as many of the system service daemons are basically just BSD packages with minor alterations, they don’t necessarily have any hooks into Juniper’s custom daemons. This, combined with the fact that most network functions are actually handled by Juniper’s RPM daemon, results in reliance on the base kernel functions for all the non-Juniper daemons. The main issue with this is that the kernel is not VRF/routing-instance aware and exists in the simplistic world of one routing instance: default/inet.0.

The implication is that all the system services must run in default/inet.0, which is something you might not want, as that might be the default routing instance for your data plane. So if you want total data/management/control plane separation, you might have an issue. The other problem is that some features of routing protocols and/or some specific configurations only work in the default routing instance (for the same reasons mentioned above). So is there a solution to this? Fortunately the answer is yes, but it comes with quite a few caveats.

How to do this is described in very brief form here, but as that doesn’t explain anything beyond the basic config, I will try to provide a bit more information based on my testing and deployment.

Interface Loopback 0 unit 0

All the base system services are bound to interface lo0 unit 0; this is the only unit that the system is capable of binding the system services to. If you create any other unit and leave out unit 0 with an IPv4 address assigned, you will get the following message (in this case for NTP):

    root> show ntp status
    /usr/bin/ntpq: socket: Protocol not supported

    root> show ntp associations
    /usr/bin/ntpq: socket: Protocol not supported

So the first thing is that lo0.0 has to have an IPv4 address. I would suggest something non-routable, like 127.0.0.1/32:

    root# set interfaces lo0 unit 0 family inet address 127.0.0.1/32

So now the system daemons will actually run, but in this setup the default routing instance has no way of talking to the servers. Even though this IP is as good as any, for the next step (and the last) we will need one more non-routable address. This time I used something that can appear in the routing table without too much fuss: 192.168.255.255/32.

    root# set interfaces lo0 unit 0 family inet address 192.168.255.255/32

The obvious question is what is this address good for. We need it for 3 reasons.

- we need to bind the system services to an IP other than localhost in the configuration

- we need to do source nat (next step)

- this address will exist in the routing table for the return traffic (last step)
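For reference, after both `set` commands above are committed, the loopback stanza should look roughly like the sketch below. This only restates the two addresses already used in this post in hierarchical form; nothing else about the interface is assumed.

```
interfaces {
    lo0 {
        unit 0 {
            family inet {
                /* keeps the base system services happy */
                address 127.0.0.1/32;
                /* used for service binding, source NAT and return routing */
                address 192.168.255.255/32;
            }
        }
    }
}
```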

System services configuration

Now that we have a working lo0 to bind the services to, we should configure the services themselves. This is all done in the system stanza and would look something like this:

    system {
        name-server {
            1.1.1.1;
        }
        radius-server {
            1.1.1.1 {
                port 1812;
                secret "$9$V3ryS3cr3td4t4";
                source-address 192.168.255.255;
            }
        }
        radius-options {
            attributes {
                nas-ip-address 1.1.1.1;
            }
        }
        syslog {
            host 1.1.1.1 {
                any any;
            }
            source-address 192.168.255.255;
        }
        ntp {
            boot-server 1.1.1.1;
            server 1.1.1.1 prefer;
            server 1.1.1.2;
            source-address 192.168.255.255;
        }
    }

Note that at this point some of the system services don’t have a source address configured; those are the ones that automatically bind to lo0.0. Some of those with the source specified do not really use it as expected. As a prime example, let’s see what the configuration means for NTP (courtesy of Juniper):

source-address—A valid IP address configured on one of the SRX Series devices. For system logging, the address is recorded as the message source in messages sent to the remote machines specified in all host hostname statements at the [edit system syslog] hierarchy level, but not for messages directed to the other Routing Engine.

So this is not only confusing but outright misleading: in effect all the services above must use an IPv4 address on lo0.0, as otherwise they simply won’t work.

Source NAT between default and specific routing instance

Previously we configured the two IP addresses on the loopback, and now we can create a source NAT between the routing instances so that the system services traffic sourced in the default RI has some way of reaching the servers. The caveat is that this source NAT must not be set up with a source of any (0.0.0.0/0): if you do this, some other system services that are sourced from the default routing instance will stop working, the prime examples being ping and traceroute. You can either match on the destination IPs or, as I’ve done, match on protocols. The result should look something like this:

    security nat source
    pool snat_pool_lo0 {
        address {
            192.168.255.255/32;
        }
    }

    security nat source
    rule-set snat_rs_host_ntp {
        from zone junos-host;
        to routing-instance ri_prod;
        rule snat_rs_host_prod_ntp {
            match {
                destination-port {
                    123;
                }
            }
            then {
                source-nat {
                    pool {
                        snat_pool_lo0;
                    }
                }
            }
        }
        rule snat_rs_host_radius {
            match {
                destination-port {
                    1812 to 1813;
                }
            }
            then {
                source-nat {
                    pool {
                        snat_pool_lo0;
                    }
                }
            }
        }
        ... etc.
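The rules in this post match on destination ports. If you prefer the other option mentioned earlier, matching on the destination IPs of the servers, a rule could be sketched roughly as follows. The rule name `snat_rs_host_by_dst` is hypothetical, and 1.1.1.1/32 is simply the placeholder server address used throughout this post; the rule would sit inside the same rule-set and reuse the same pool.

```
rule snat_rs_host_by_dst {
    match {
        /* placeholder server address from this post, not a real deployment value */
        destination-address 1.1.1.1/32;
    }
    then {
        source-nat {
            pool {
                snat_pool_lo0;
            }
        }
    }
}
```

Either way, the point is the same: keep the match narrow enough that locally sourced ping and traceroute are not caught by the rule.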

This source NAT uses a pool with an IP address because there are multiple interfaces in the destination routing instance. The aim is to use the lo0.X that exists in that instance. Even though lo0.X should be used for the source NAT based on the rules, the SRX platform seems to take a somewhat random approach and uses the first forwarding interface, so usually ge-x/x/x. This is OK if you have a single uplink and you use the IP from this interface, but in any other case it can result in unwanted behavior. Using the pool fixes this issue, even though pools are intended for a different purpose.

For the NAT to work you also need to add a default route in the default routing instance with a next-table pointing to ri_vr (the routing instance that holds all the routes to the servers).
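A minimal sketch of such a route, assuming the instance is named `ri_vr` as in this post and that its IPv4 unicast table therefore follows the usual `<instance>.inet.0` naming:

```
root# set routing-options static route 0.0.0.0/0 next-table ri_vr.inet.0
```

This route lives in the default instance (inet.0) and simply hands the lookup over to the table that actually knows how to reach the servers.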

Instance-import to the RI with the actual routing table

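This is the last step of the setup. The source NAT has dealt with the outbound traffic, so what remains is the return traffic: the lo0.0 address has to be imported into the routing instance that holds the actual routes.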

    policy-options {
        policy-statement import-lo0-route {
            term 1 {
                from {
                    instance master;
                    protocol direct;
                    route-filter 192.168.255.255/32 exact;
                }
                then accept;
            }
            term default {
                then reject;
            }
        }
    }
    routing-instances {
        ri_vr {
            routing-options {
                instance-import import-lo0-route;
            }
        }
    }

As described elsewhere, the IP of lo0.0 must exist in the routing table of the routing instance that actually has the routes to the servers, so that when the return packets arrive they are forwarded to lo0.0 and the system processes them. The NAT will not take place on this path even though it should. The reason is that NAT is prioritized differently when traffic is sourced from the SRX itself, which is always the case for the services mentioned above.
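To check the result, a few standard operational commands can help. This is only a rough checklist, assuming the instance name `ri_vr` and the loopback address used in this post; the output will differ per platform and release, so none is shown here.

```
root> show route table ri_vr.inet.0 192.168.255.255/32
root> show security nat source rule all
root> show ntp associations
```

The first command should show the imported lo0.0 route in the instance table, the second shows whether the source NAT rules are being hit, and the third confirms that NTP can actually reach its servers.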

That is it: no direct access to the default routing instance, while the services can still be sourced from it. The question is whether it is worth the hassle, and I would say for most cases no, it is not. But if you have some corner case, or you need an added layer of security, then this is the way to do it. On a side note, a management VRF as a separate routing instance was finally introduced in the 17.3 releases, but as far as I know this only applies to new kit with VM architecture, especially the MX series.